DThree19

Is your deep detector safe? Verification and certification of neural networks

CVPR Workshop, 17 June 2019, Long Beach, CA, US

SDD2019 is a half-day, single-track workshop at CVPR 2019. The workshop will be held on 17 June 2019 in Long Beach, CA, US. It is supported through ..

Timeliness

This CVPR workshop is interdisciplinary: not all speakers and participants come from a computer vision background. We also believe the workshop is extremely timely, for several reasons:

- Deep networks are now used in industrial applications, which makes safety guarantees imperative. At the same time, accidents involving autonomous cars have brought the safety of the overall system to the centre of the debate.

- Recent regulations and standards (especially the ISO 26262 updates and the RAND report) have shed more light on why these networks need a high level of certification (e.g., ASIL D certification for pedestrian detection).

- Since the development of Reluplex and Planet, scalable formal verification of practical systems seems achievable in the foreseeable future.

- CVPR is a flagship conference of the computer vision community. Most state-of-the-art vision-based systems deployed in autonomous driving originated here. Also, since part of the certification strategy is to compose a parallel system with classical computer vision approaches, CVPR is a very suitable venue.

- Last but not least, both academic contributions (such as Reluplex from David Dill's group) and industrial applications (such as those of several autonomous driving companies) have originated in California.

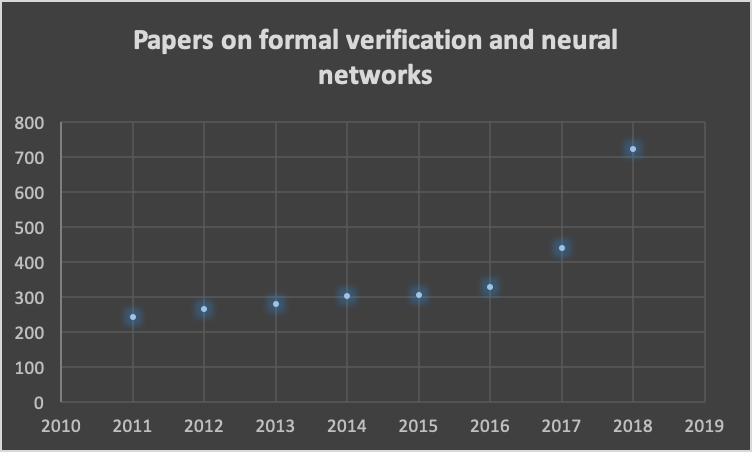

Trends based on Google Scholar

References

Here is a non-exhaustive list of relevant works and some surveys:

- Pulina, Luca, and Armando Tacchella. "An abstraction-refinement approach to verification of artificial neural networks." International Conference on Computer Aided Verification. Springer, Berlin, Heidelberg, 2010.

- Pulina, Luca, and Armando Tacchella. "Challenging SMT solvers to verify neural networks." AI Communications 25.2 (2012): 117-135.

- Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. "ImageNet classification with deep convolutional neural networks." Advances in Neural Information Processing Systems. 2012.

- Kwon, Kiseok, et al. "Co-design of deep neural nets and neural net accelerators for embedded vision applications." arXiv preprint arXiv:1804.10642 (2018).

- Shafiee, Mohammad Javad, et al. "SquishedNets: Squishing SqueezeNet further for edge device scenarios via deep evolutionary synthesis." arXiv preprint arXiv:1711.07459 (2017).

- Bastani, Osbert, et al. "Measuring neural net robustness with constraints." Advances in Neural Information Processing Systems (2016): 2613-2621.

- Hein, Matthias, and Maksym Andriushchenko. "Formal guarantees on the robustness of a classifier against adversarial manipulation." Advances in Neural Information Processing Systems (2017): 2266-2276.

- Ehlers, Ruediger. "Formal verification of piece-wise linear feed-forward neural networks." International Symposium on Automated Technology for Verification and Analysis. Springer, Cham, 2017.

- Papernot, Nicolas, et al. "Practical black-box attacks against machine learning." Proceedings of the 2017 ACM on Asia Conference on Computer and Communications Security. ACM, 2017.

- Tramèr, Florian, et al. "Ensemble adversarial training: Attacks and defenses." arXiv preprint arXiv:1705.07204 (2017).

- Gopinath, Divya, et al. "DeepSafe: A data-driven approach for checking adversarial robustness in neural networks." arXiv preprint arXiv:1710.00486 (2017).

- Huang, Xiaowei, et al. "Safety verification of deep neural networks." International Conference on Computer Aided Verification. Springer, Cham, 2017.

- Pei, Kexin, et al. "DeepXplore: Automated whitebox testing of deep learning systems." Proceedings of the 26th Symposium on Operating Systems Principles. ACM, 2017.

- Dutta, Souradeep, et al. "Output range analysis for deep neural networks." arXiv preprint arXiv:1709.09130 (2017).

- Dreossi, Tommaso, et al. "Systematic testing of convolutional neural networks for autonomous driving." arXiv preprint arXiv:1708.03309 (2017).

- Cheng, Chih-Hong, Georg Nührenberg, and Harald Ruess. "Maximum resilience of artificial neural networks." International Symposium on Automated Technology for Verification and Analysis. Springer, Cham, 2017.

- Carlini, Nicholas, and David Wagner. "Towards evaluating the robustness of neural networks." 2017 IEEE Symposium on Security and Privacy (SP). IEEE, 2017.

- Lu, Jiajun, et al. "No need to worry about adversarial examples in object detection in autonomous vehicles." arXiv preprint arXiv:1707.03501 (2017).

- Akhtar, Naveed, and Ajmal Mian. "Threat of adversarial attacks on deep learning in computer vision: A survey." arXiv preprint arXiv:1801.00553 (2018).

- Katz, Guy, et al. "Reluplex: An efficient SMT solver for verifying deep neural networks." International Conference on Computer Aided Verification. Springer, Cham, 2017.