Invited Speakers

- Prof. Sanjit A. Seshia, UC Berkeley, US

Title: Semantic Adversarial Deep Learning for Perception

Abstract : Adversarial analysis of deep neural networks has shown us that it is easy to find small perturbations of inputs that produce undesired outputs. However, not all adversarial inputs are meaningful. In this talk, I will describe how formal methods can be used to define the "semantic space" of inputs for a deep neural network used for perceptual tasks, to analyze the network for desired semantic properties, and to re-train the network to achieve improved performance. I will present two open-source tools, VerifAI and Scenic, that can help researchers and practitioners in machine learning, computer vision, and related areas to design and analyze deep neural networks in their semantic context.

Biography : Sanjit A. Seshia is a Professor in the Department of Electrical Engineering and Computer Sciences at the University of California, Berkeley. His research centers on the development of formal methods for system design, with a current focus on cyber-physical systems, computer security, robotics, and machine learning. He has helped pioneer the areas of satisfiability modulo theories (SMT) and formal inductive synthesis, and has co-authored a widely-used textbook on embedded, cyber-physical systems. More recently, he is leading a "verified artificial intelligence" effort, bringing formal methods to bear on AI/ML based systems.

- Prof. Alessio R. Lomuscio, Imperial College, UK

Title: Towards formally verifying CNN-based perception systems

Abstract : I will begin by introducing a notion of local transformational robustness for CNN-based perception systems. Differently from the often-used concept of local robustness, which is based on pixel perturbations, local transformational robustness refers to the invariance of classifications with respect to small affine and photometric transformations of a given image. I will then present a method to verify whether a CNN-based classifier is locally transformationally robust by recasting the problem to mixed integer linear programming. I will present an implementation and discuss the experimental results obtained for a CNN trained from the MNIST data set. (The talk is based on joint work with P. Kouvaros. Draft available at: ).

Biography : Alessio Lomuscio , PhD in 1999, is full Professor in the Department of Computing at Imperial College London (UK), where he leads the Verification of Autonomous Systems Group and serves as Deputy Director and Imperial lead for the UKRI Doctoral Training Centre in Safe and Trusted Artificial Intelligence. He is a Fellow of the European Association of Artificial Intelligence and currently holds a Royal Academy of Engineering Chair in Emerging Technologies. He held an EPSRC Leadership Fellowship between 2010 and 2015. Alessio's research interests concern the realisation of safe artificial intelligence. Since 2000 he has worked on the development of formal methods for the verification of autonomous systems and multi-agent systems. To this end, he has put forward several methods based on model checking and various forms abstraction to verify AI systems. A particular focus of Alessio's work has been the attention to support AI specifications, including those concerning the knowledge and strategic properties of the agents in their interactions. The methods and resulting toolkits have found applications in autonomous vehicles, robotics, and swarms. He has recently turned his attention to the verification of systems synthesised via machine learning methods. He has published over 150 papers in AI conferences (including AAMAS, IJCAI, KR, AAAI, ECAI), verification and formal methods conferences (CAV, SEFM), and international journals (AIJ, JAIR, JAAMAS).

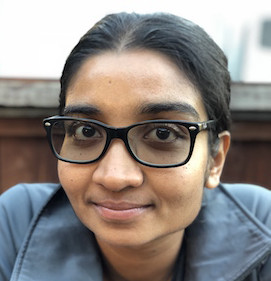

- Dr. Vasumathi Raman , Nuro, US

Title: How to safety in three simple steps

Abstract : Adversarial analysis of deep neural networks has shown us that it is easy to find small perturbations of inputs that pSafety is a loaded word, so let's not bother defining it. In this talk we argue for a simple, three-step approach to working on safety problems relevant to the autonomous vehicle industry. 1) Choose a specific undesirable behavior or hazard to address, including the subsystem it plagues, circumstances under which it happens, and whether the system can identify that it is happening (i.e., define the problem). 2) Clarify the desired system response, and create a plan for how the correct behavior can be verified or validated (i.e., write the unit tests). 3) Implement a solution that achieves the desired behavior. We will discuss some examples of this approach and associated challenges.

Biography : Vasu Raman works on prediction, behavior and motion planning at Nuro. She previously held positions at Zoox, United Technology Research Center, and Caltech. She holds a PhD in Computer Science from Cornell University and a BA in Math and CS from Wellesley College.

- Rahul Gulati, Qualcomm, US

Title: Comprehending Functional Safety in Autonomous Driving Systems

Abstract : The talk will provide an introduction to functional safety and discuss how the upcoming standards are trying to address the functional safety related concerns in automated driving systems. Plus, a discussion that would cover some of the challenges on the road ahead towards highly automated driving.